Developing Langchain Agents with the MinIO SDK for LLM Tool-Use

In my previous article on Langchain, I explored the use of the “community S3 loaders”, while useful, offer limited functionality. Here we delve into the development of customized tools (focusing on MinIO object upload for this demonstration) and their integration with Large Language Models (LLMs) through Langchain Agents and Executors. This demonstration showcases the process of uploading objects to a bucket, leveraging the MinIO Python SDK and Langchain as the foundational tools. This exploration opens up new possibilities, moving beyond basic loaders to a more versatile and powerful implementation that enhances the capabilities of language model-driven applications.

This strategic move towards combining sophisticated language models with robust data storage capabilities, is an evolution that enhances the functionality of language-driven applications, providing a pathway to advanced tool utilization with Large Language Models (LLMs). Utilizing MinIO's secure and scalable object storage in conjunction with Langchain's ability to leverage the full capabilities of LLMs like OpenAI's GPT, developers can create systems that not only mimic human text generation but also carry out complex tasks, elevating the efficiency of data management to new heights.

Langchain serves as the critical interface that translates human instructions into the operational language of machine intelligence. Envision it as a sophisticated intermediary, capable of interpreting user commands and orchestrating a range of activities from data organization within MinIO's structures to the detailed analysis of data sets. This capability effectively converts the theoretical prowess of LLMs into tangible, functional assets within the developer's toolkit, allowing for the crafting of advanced solutions that were once considered futuristic.

In this guide, we prioritize practicality, steering towards actionable development practices. We invite developers to embrace the potential that lies in the union of Langchain and MinIO SDK, to not only innovate but also to redefine the boundaries of what can be achieved with the intelligent automation of today's digital tools.

The source code and detailed documentation to accompany this exploration can be found here. This notebook provides the minimal and necessary resources to get started on your journey with Langchain and MinIO, offering a hands-on experience to deepen your understanding and skills in creating intelligent, data-driven applications.

Enhancing Conversational Agents with Contextual Memory and Advanced Data Handling

Integrating memory management into Langchain applications significantly elevates their ability to deliver responses that are not only relevant but also deeply context-aware. This advancement permits an agent to draw upon past dialogues, providing a richer, more layered understanding of each interaction. The real power of this feature lies in its ability to tailor responses based on the accumulated history of the user's session, transforming standard interactions into personalized experiences that resonate more deeply with users.

The inclusion of memory capabilities, especially when combined with the functionality to expose object stores as agent tools, revolutionizes the landscape of AI-driven conversational agents. Developers are bestowed with the tools to create agents that not only execute tasks with unparalleled accuracy but also evolve and adapt to users' needs through ongoing interactions. This adaptability marks a leap forward in developing interactive applications, where the agent not only responds to but anticipates user requirements, crafting a truly interactive and intuitive user experience.

Moreover, this approach lays down a comprehensive blueprint for seamlessly merging MinIO's robust data management capabilities with Langchain's advanced processing power, offering a meticulously detailed guide for enhancing conversational agents. The result is a harmonious integration that leverages the strengths of MinIO and Langchain, offering developers a rich palette for creating applications that are as technically profound as they are user-centric.

Setting Up the Environment

It's crucial to begin by setting up the development environment with all necessary packages. This ensures that you have all the required libraries and dependencies installed.

First install two key packages: the MinIO Python SDK and Langchain. The MinIO SDK is a powerful tool that allows us to interact with MinIO buckets, enabling operations such as file uploads, downloads, and bucket management directly from our Python scripts. On the other hand, Langchain is an innovative framework that enables the creation of applications combining large language models with specific tasks, such as file management in this case.

Together, these packages form the backbone of our tool, allowing us to leverage the strengths of both MinIO's robust storage solutions and the advanced natural language processing capabilities of large language models.

To install these packages, run the following command in your terminal:

pip install -q -U minio "langchain[all]"Install package dependencies

This command installs the latest versions of the MinIO client and Langchain, along with all optional dependencies required for Langchain.

Integrating Langsmith for Process Monitoring and Tracing (Optional)

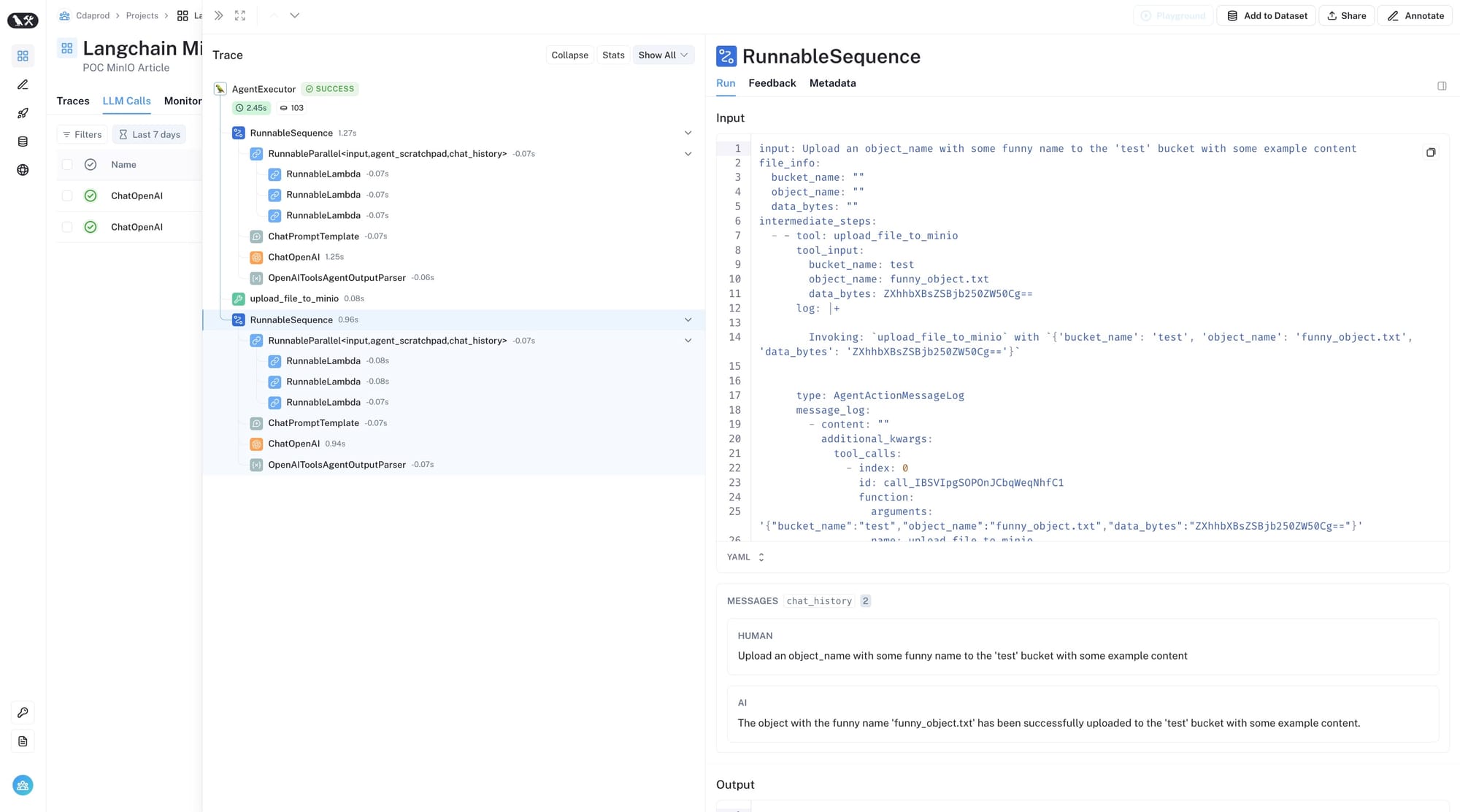

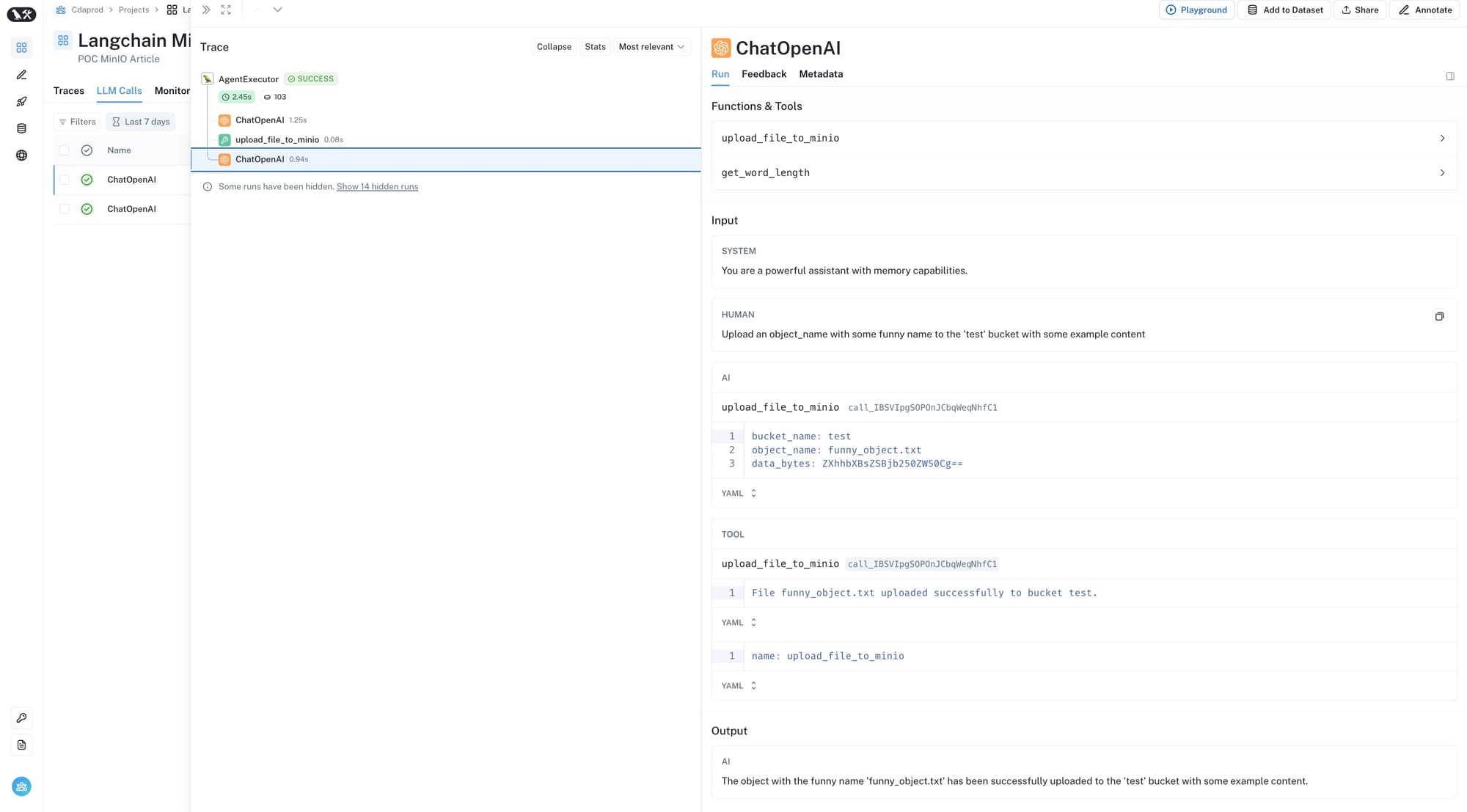

A key aspect of developing with Langchain is the ability to monitor and trace the execution of tasks, especially when integrating complex functionalities like object storage operations with MinIO. Langsmith offers an intuitive platform to visualize these processes, providing real-time insights into the performance and efficiency of your Langchain applications. Below, we’ve included screenshots from Langsmith that highlight the seamless execution of tasks, from invoking LLMs to performing specific actions such as file uploads and data processing.

These visual aids not only serve to demystify the underlying processes but also showcase the practical application of Langchain and MinIO SDK in creating sophisticated, AI-driven tools. Through Langsmith, developers can gain a deeper understanding of their application’s behavior, making it easier to optimize and refine their solutions for better performance and user experience.

RunnableSequence within Langchain, captured through Langsmith.

ChatOpenAI LLM, tool-use, prompting, and more, visualized through Langsmith.Incorporating Langsmith into your development workflow not only enhances transparency but also empowers you to build more reliable and efficient Langchain applications. By leveraging these insights, you can fine-tune your applications, ensuring they meet the high standards required for production environments.

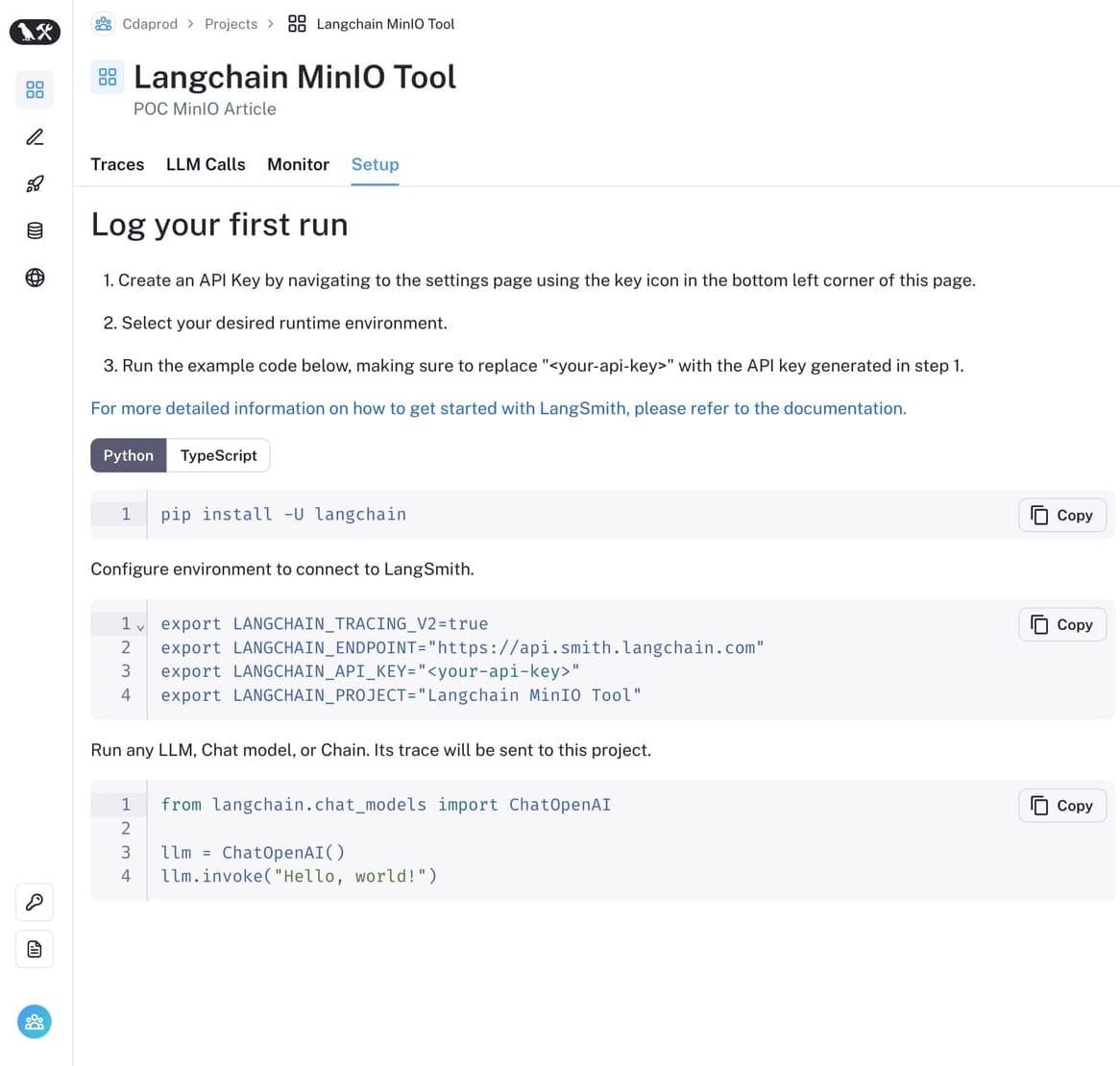

To get started with Langsmith, follow these steps:

1. Create a Langsmith Project: Visit smith.langchain.com and sign up or log in to your account. Once logged in, create a new project by selecting the option to create a new project and name it “Langchain MinIO Tool”. This project will be the central hub for monitoring the interactions and performance of your Langchain integrations.

2. Generate an API Key: After creating your project, navigate to the project settings to generate a new API key. This key will authenticate your application's requests to Langsmith, ensuring secure communication between your tool and the Langsmith service.

3. Configure Environment Variables: Langsmith requires several environment variables to be set up in your development environment. These variables enable your application to communicate with Langsmith's API and send tracing data.

An example of these variables includes:

export LANGCHAIN_TRACING_V2=true

export LANGCHAIN_ENDPOINT="https://api.smith.langchain.com"

export LANGCHAIN_API_KEY="<your-api-key>"

export LANGCHAIN_PROJECT="Langchain MinIO Tool"Exporting environment variables for Langsmith

Replace <your-api-key> with the actual API key generated in the previous step. These environment variables enable the Langchain SDK in your application to send tracing and monitoring data to your Langsmith project, providing you with real-time insights into the operation and performance of your Langchain integrations.

Integrating Langsmith is an optional but recommended step for those looking to maximize the efficiency and reliability of their Langchain applications. It provides valuable tools for performance monitoring, debugging, and optimization, ensuring your Langchain MinIO Tool operates at its best.

Initializing OpenAI and MinIO Clients for File Management

The foundation of building a Langchain tool that integrates with MinIO for file uploads involves setting up the clients for both OpenAI and MinIO. This setup allows your application to communicate with OpenAI's powerful language models and MinIO's efficient file storage system. Here's how you can initialize these crucial components in Python:

Setting Up the Language Model with OpenAI

First, we need to initialize the language model using OpenAI's API. This step involves using the langchain_openai package to create an instance of ChatOpenAI, which will serve as our interface to OpenAI's language models. This requires an API key from OpenAI, which you can obtain from your OpenAI account.

from langchain_openai import ChatOpenAI

llm = ChatOpenAI(api_key="")Setup llm using ChatOpenAI

Replace the empty string in (api_key="") with your actual OpenAI API key. This key enables authenticated requests to OpenAI, allowing you to leverage the language model for processing and generating text.

Importing Necessary Libraries

Before proceeding, ensure you import the necessary libraries. These imports include io for handling byte streams and tool from langchain.agents, which is a decorator used to register functions as tools that can be utilized by Langchain agents.

import io

from langchain.agents import toolImporting io and langchain.agents tool

Initializing the MinIO Client

Next, we initialize the MinIO client, which allows our application to interact with MinIO buckets for operations like uploading, downloading, and listing files. The MinIO client is initialized with the server endpoint, access key, secret key, and a flag to indicate whether to use a secure connection (HTTPS).

from minio import Minio

from minio.error import S3Error

minio_client = Minio('play.min.io:443',

access_key='minioadmin',

secret_key='minioadmin',

secure=True)Setting the minio_client

In this example, we're using MinIO's play server (play.min.io:443) with the default credentials (minioadmin for both access and secret keys). In a production environment, you should replace these with your MinIO server details and credentials.

By initializing the OpenAI and MinIO clients, you set the stage for developing advanced tools that can interact with natural language processing models and manage files in MinIO buckets, opening a wide range of possibilities for automating and enhancing file management tasks.

Managing Bucket Availability in MinIO

An essential part of working with MinIO involves managing buckets, which are the basic containers that hold your data. Before uploading files, it's important to ensure that the target bucket exists. This process involves checking the existence of a bucket and creating it if it doesn't exist. This approach not only prepares your environment for file operations but also avoids errors related to non-existent buckets.

Here's a simple yet effective helper function and code snippet for managing the availability of a bucket in MinIO:

bucket_name = "test"

try:

# Check if bucket exists

if not minio_client.bucket_exists(bucket_name):

# Create the bucket because it does not exist

minio_client.make_bucket(bucket_name)

print(f"Bucket '{bucket_name}' created successfully.")

else:

print(f"Bucket '{bucket_name}' already exists.")

except S3Error as err:

print(f"Error encountered: {err}")Bucket helper function

This code performs the following operations:

1. Define the Bucket Name: It starts by specifying the name of the bucket you want to check or create, in this case, "test".

2. Check for Bucket Existence: It uses the bucket_exists method of the MinIO client to check if the bucket already exists.

3. Create the Bucket if Necessary: If the bucket does not exist, the make_bucket method is called to create a new bucket with the specified name.

4. Handle Errors: The operation is wrapped in a “try-except block” to catch and handle any S3Error exceptions that may occur during the process, such as permission issues or network errors.

By ensuring the bucket's existence before performing file operations, you can make your applications more robust and user-friendly, providing clear feedback and avoiding common pitfalls associated with bucket management in MinIO.

Implementing File Upload Functionality

Once the environment is configured and the necessary checks are in place to ensure that the target bucket exists, the next step is to implement the core functionality of uploading files to MinIO. This involves creating a function that takes the bucket name, the object name (file name within the bucket), and the file's binary data as inputs, and then uses the MinIO client to upload the file to the specified bucket.

Here's how you can define this upload function:

@tool

def upload_file_to_minio(bucket_name: str, object_name: str, data_bytes: bytes):

"""

Uploads a file to MinIO.

Parameters:

bucket_name (str): The name of the bucket.

object_name (str): The name of the object to create in the bucket.

data_bytes (bytes): The raw bytes of the file to upload.

"""

data_stream = io.BytesIO(data_bytes)

minio_client.put_object(bucket_name, object_name, data_stream, length=len(data_bytes))

return f"File {object_name} uploaded successfully to bucket {bucket_name}."Python “upload” function with Langchain’s @tool decorator.

Key Components of the Upload Function:

- Function Decorator (@tool): This decorator is used to register the function as a tool within the Langchain framework, making it callable as part of a Langchain workflow or process. It enables the function to be integrated seamlessly with Langchain agents and executors.

- Parameters: The function takes three parameters:

- bucket_name: The name of the MinIO bucket where the file will be uploaded.

- object_name: The name you wish to assign to the file within the bucket.

- data_bytes: The binary data of the file to be uploaded.

- Creating a Byte Stream: The binary data (data_bytes) is wrapped in a BytesIO stream. This is necessary because the MinIO client's put_object method expects a stream of data rather than raw bytes.

- Uploading the File: The put_object method of the MinIO client is called with the bucket name, object name, data stream, and the length of the data. This method handles the upload process, storing the file in the specified bucket under the given object name.

- Return Statement: Upon successful upload, the function returns a confirmation message indicating the success of the operation.

This upload function is a fundamental building block for creating applications that interact with MinIO storage. It encapsulates the upload logic in a reusable and easily callable format, allowing developers to integrate file upload capabilities into their Langchain applications and workflows efficiently.

Enhancing Functionality with RunnableLambda and Secondary Tools

After establishing the fundamental upload functionality, enhancing the system for broader integration and additional utility can further refine the tool's capabilities. This involves creating a RunnableLambda for the upload function and defining secondary tools that can be utilized within the same ecosystem. These steps not only extend the functionality but also ensure seamless integration with Langchain workflows.

Creating a RunnableLambda for File Upload

Langchain's architecture supports the encapsulation of functions into runnables, which can be seamlessly executed within its framework. To facilitate the execution of the upload function within Langchain workflows, we wrap it in a RunnableLambda. This allows the function to be easily integrated with Langchain's agents and executors, enabling automated and complex workflows that can interact with MinIO.

from langchain_core.runnables import RunnableLambda

upload_file_runnable = RunnableLambda(upload_file_to_minio)Wrap upload_file_runnable with Langchain’s RunnableLambda

The RunnableLambda takes our upload_file_to_minio function and makes it readily usable within Langchain's system, enhancing the tool's interoperability and ease of use within different parts of the application.

Incorporating Secondary Functions for Extended Functionality

In our exploration of integrating MinIO with Langchain, we've primarily focused on the core functionality of uploading files. However, the versatility of Langchain allows for the incorporation of a wide range of functionalities beyond just file management. To illustrate this flexibility, we've included an example of a secondary function, get_word_length, directly inspired by the examples found in the Langchain documentation. This serves to demonstrate how easily additional functions can be integrated into your Langchain projects.

The inclusion of the get_word_length function is intended to showcase the process of adding more functionalities to your Langchain tool.

Here's a closer look at how this secondary tool is defined:

@tool

def get_word_length(word: str) -> int:

"""Returns the length of a word."""

return len(word)Python “secondary” function with Langchain’s @tool decorator

This function, marked with the @tool decorator, is a simple yet effective demonstration of extending your tool's capabilities. By registering this function as another tool within the Langchain framework, it becomes callable in a similar manner to the file upload functionality, showcasing the ease with which developers can enrich their applications with diverse capabilities.

The process of adding this function, taken from Langchain's documentation, is not only a testament to the ease of extending your application's functionality but also highlights the importance of leveraging existing resources and documentation to enhance your projects. This approach encourages developers to think beyond the immediate requirements of their projects and consider how additional features can be integrated to create more versatile and powerful tools.

These enhancements demonstrate the system's capability to not only perform core tasks like file uploads but also execute additional functionalities, all integrated within the Langchain framework. The inclusion of a RunnableLambda and the introduction of secondary tools exemplify how developers can build a rich ecosystem of functions that work together to automate and streamline processes, leveraging both Langchain and MinIO's robust features.

Crafting Interactive Chat Prompts with Langchain

As we delve deeper into the integration of MinIO with Langchain, a crucial aspect is designing an interactive experience that leverages the capabilities of both the MinIO upload tool and any additional tools we've integrated. This is where the creation of a ChatPromptTemplate becomes essential. It serves as the blueprint for the interactions between the user and the system, guiding the conversation flow and ensuring that the user's commands are interpreted correctly and efficiently.

Creating a ChatPromptTemplate

The ChatPromptTemplate is a powerful feature of Langchain that allows developers to pre-define the structure of chat interactions. By specifying the roles of the system and the user within the chat, along with placeholders for dynamic content, we can create a flexible yet controlled dialogue framework. Here's how you can create a chat prompt template that incorporates the functionality of our tools:

from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder

prompt = ChatPromptTemplate.from_messages([

("system", "You are a powerful assistant equipped with file management capabilities."),

("user", "{input}"),

MessagesPlaceholder(variable_name="agent_scratchpad"),

])Importing and defining prompt using ChatPromptTemplate and MessagePlaceholder

In this template:

- System Message: The first message from the "system" sets the context for the interaction, informing the user (or the language model assuming the user's role) that they are interacting with an assistant that possesses file management capabilities. This helps to frame the user's expectations and guide their queries or commands.

- User Input Placeholder: The "user" key is followed by "{input}", which acts as a placeholder for the user's actual input. This dynamic insertion point allows the system to adapt to various user commands, facilitating a wide range of file management tasks.

- MessagesPlaceholder for Agent Scratchpad: The MessagesPlaceholder with the variable name "agent_scratchpad" is a dynamic area within the chat where additional information, such as the results of tool executions or intermediate steps, can be displayed. This feature enhances the interactivity and responsiveness of the chat, providing users with feedback and results in real-time.

This step in setting up the chat prompt template is pivotal for creating an engaging and functional user interface for our Langchain application. It not only structures the interaction but also seamlessly integrates the functionalities of our MinIO upload tool and any additional tools, making them accessible through natural language commands within the chat environment.

Binding Tools to the Language Model for Enhanced Functionality

A pivotal step in harnessing the full potential of our Langchain application is the integration of our custom tools with the language model. This use of “custom tools”, and similar “tool-use” integrations, allows the language model to not only understand and generate natural language but also to execute specific functionalities, such as uploading files to MinIO or calculating the length of a word. By binding these tools directly to the language model, we create a powerful, multifunctional system capable of processing user inputs and performing complex tasks based on those inputs.

How to Bind Tools to the Language Model

Binding tools to the language model (LLM) involves creating a list of the functions we've defined as tools and using the bind_tools method provided by Langchain's ChatOpenAI class. This method associates our tools with a particular instance of the language model, making them callable as part of the language processing workflows. Here's how this can be achieved:

tools = [upload_file_to_minio, get_word_length]

llm_with_tools = llm.bind_tools(tools)Binding the list of functions to the LLM

In this code snippet:

- Tools List: We start by defining a list named tools that contains the functions we've decorated with @tool. This includes our upload_file_to_minio function for uploading files to MinIO and the get_word_length function as an example of a secondary tool. You can add more tools to this list as needed.

- Binding Tools: The bind_tools method takes the list of tools and binds them to the llm instance. This creates a new language model instance, llm_with_tools, which has our custom tools integrated into it. This enhanced language model can now interpret commands related to the functionalities provided by the bound tools, enabling a seamless interaction between natural language processing and task execution.

This process of binding tools to the language model is crucial for creating interactive and functional Langchain applications. It bridges the gap between natural language understanding and practical task execution, allowing users to interact with the system using conversational language to perform specific actions, such as file management in MinIO. This integration significantly expands the capabilities of Langchain applications, paving the way for innovative uses of language models in various domains.

Implementing Memory Management for Enhanced User Interaction

Creating an engaging and intuitive Langchain application requires more than just processing commands—it demands a system that can remember and learn from past interactions. This is where memory management becomes essential, enabling the application to maintain context across conversations, which is particularly useful for handling complex queries and follow-up questions.

Establishing the Agent and Executor Framework

At the heart of our application lies the AgentExecutor, a sophisticated mechanism designed to interpret user inputs, manage tasks, and facilitate communication between the user and the system. To set this up, we need to incorporate several key components from Langchain:

from langchain.agents import AgentExecutor

from langchain.agents.format_scratchpad.openai_tools import format_to_openai_tool_messages

from langchain.agents.output_parsers.openai_tools import OpenAIToolsAgentOutputParserImporting AgentExecutor, openai_tools, and OpenAIToolsAgentOutputParser

This foundational setup ensures our application has the necessary tools to execute tasks, interpret the language model's output, and apply our custom functionalities effectively.

Memory Management Integration

To enrich the user experience with context-aware responses, we update our chat prompt template to include memory management features. This involves adding placeholders for storing and referencing the chat history within our conversations:

from langchain_core.messages import AIMessage, HumanMessage

from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder

prompt = ChatPromptTemplate.from_messages([

("system", "You are a powerful assistant with memory capabilities."),

MessagesPlaceholder(variable_name="chat_history"),

("user", "{input}"),

MessagesPlaceholder(variable_name="agent_scratchpad"),

])

chat_history = []Refactoring prompt and agent_scratchpad with empty chat_history list

This adjustment enables our application to dynamically incorporate previous interactions into the ongoing conversation, ensuring a cohesive and context-rich dialogue with the user.

Refining the Agent with Contextual Awareness

To fully leverage the benefits of memory management, we refine our agent's definition to incorporate chat history actively. This involves defining specific behaviors for handling inputs, managing the agent's scratchpad, and incorporating the chat history into the agent's decision-making process:

agent = (

{

"input": lambda x: x["input"],

"agent_scratchpad": lambda x: format_to_openai_tool_messages(x["intermediate_steps"]),

"chat_history": lambda x: x["chat_history"],

}

| prompt

| llm_with_tools

| OpenAIToolsAgentOutputParser()

)

agent_executor = AgentExecutor(agent=agent, tools=tools, verbose=True)Define agent chain and agent_executor

In this enhanced agent setup:

- Custom Lambda Functions: The agent utilizes lambda functions to handle the user's input, manage intermediate steps stored in the agent's scratchpad, and seamlessly integrate the chat history into the conversation flow.

- Agent Pipeline Assembly: By chaining the components with the pipeline operator (|), we create a streamlined process that takes the user's input, enriches it with contextual history, executes the necessary tools, and interprets the output for actionable responses.

- Execution with AgentExecutor: The AgentExecutor is initialized with our context-aware agent and the predefined tools, equipped with verbose logging for detailed operational insights.

Executing File Uploads with Contextualized Agent Interactions

Integrating MinIO file uploads into a Langchain-based application offers a practical example of how conversational AI can streamline complex operations. This capability becomes even more powerful when combined with memory management, allowing the agent to maintain context and manage tasks like file uploads dynamically. Here’s how to set up and execute a file upload process, demonstrating the application's ability to interpret and act on user commands.

Defining the User Prompt and File Information

First, we establish a user prompt that instructs the system on the desired action—in this case, uploading a file with specific parameters. Alongside this, we define the structure for the file information, including the bucket name, object name, and the data bytes of the file to be uploaded:

input1 = "Upload a text object with some funny name to the 'test' bucket with some example content"

file_info = {

"bucket_name": "",

"object_name": "",

"data_bytes": b""

}input1 is where we define our user prompt as a string.

This setup not only specifies what the user wants to do but also encapsulates the necessary details for the MinIO upload operation in a structured format.

The Agent Execution

With the prompt and file information defined, we proceed to simulate the execution of the file upload command through our agent. This simulation involves invoking the agent_executor with the specified input, including the chat history to maintain conversational context:

result = agent_executor.invoke({"input": input1, "chat_history": chat_history, "file_info": file_info})

chat_history.extend([

HumanMessage(content=input1),

AIMessage(content=result["output"]),

])Invoking our agent_executor with input1 and chat_history

In this process:

- Invocation of Agent Executor: The agent_execution is called with a dictionary containing the user's input, the current chat history, and the structured file information. This approach allows the agent to understand the command within the context of the conversation and access the necessary details for the file upload operation.

- Updating Chat History: After executing the command, we update the chat history with the new interaction. This includes recording the user's input as a HumanMessage and the system's response as an AIMessage. This step is crucial for maintaining an accurate and comprehensive record of the conversation, ensuring that context is preserved for future interactions.

This example illustrates the seamless integration of conversational AI with cloud storage operations, showcasing how a Langchain application can interpret natural language commands to perform specific tasks like file uploads. By maintaining a conversational context and dynamically managing file information, the system offers a user-friendly and efficient way to interact with cloud storage services, demonstrating the practical benefits of combining AI with cloud infrastructure.

Streamlining File Uploads with Conversational Prompts

One of the remarkable features of integrating Langchain with MinIO for file uploads is the flexibility it offers in handling user requests. While the detailed approach of specifying file_info directly provides clarity and control, the system is also designed to understand and extract necessary information from conversational prompts. This means users can initiate file uploads without explicitly filling out the file_info structure, simply by conveying all required information within the input1 prompt.

Simplifying File Upload through Natural Language

By crafting a detailed prompt like input1, users can communicate their intent and provide all necessary details for the file upload in a single message. The system's underlying intelligence, powered by Langchain's processing capabilities, parses this input to extract actionable data such as the bucket name, object name, and content. This approach mimics natural human communication, making the interaction with the application more intuitive and user-friendly.

input1 = "Upload a text object named 'funny_name.txt' with 'This is some example content.' to the 'test' bucket."Example of a Conversational Prompt

This prompt succinctly communicates the user's intent, specifying the object name, content, and target bucket, all within a natural language sentence. The application then processes this prompt, dynamically generating the equivalent file_info needed for the upload operation.

Leveraging the file_input Method for Programmatic Execution

For scenarios where the application is being used programmatically or when automating tasks as part of a larger workflow, expanding upon the file_input method becomes invaluable. This method allows for a more structured approach to specifying file details, making it ideal for situations where prompts are generated or modified by other parts of an application.

The flexibility to switch between conversational prompts and programmatically specified file_info showcases the adaptability of the Langchain and MinIO integration. It enables developers to tailor the file upload process to suit the needs of their application, whether they are aiming for the simplicity of natural language interaction or the precision of programmatically defined tasks.

The ability to run file uploads through Langchain without manually filling out the file_info strings, relying instead on the richness of natural language prompts, significantly enhances the usability and accessibility of the application. This feature, combined with the option to use the file_input method for more structured command chains, exemplifies the system's versatility in catering to diverse user needs and operational contexts.

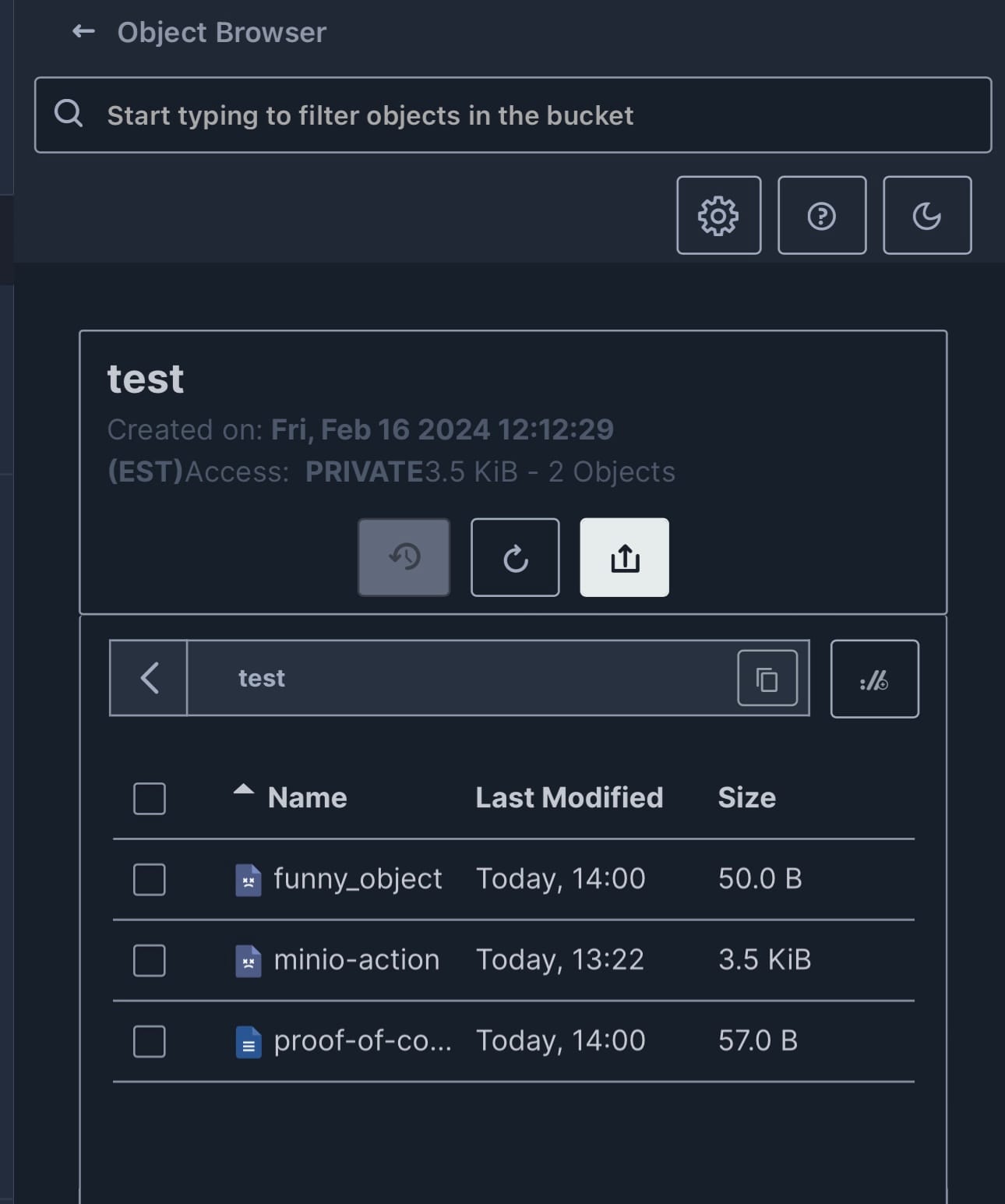

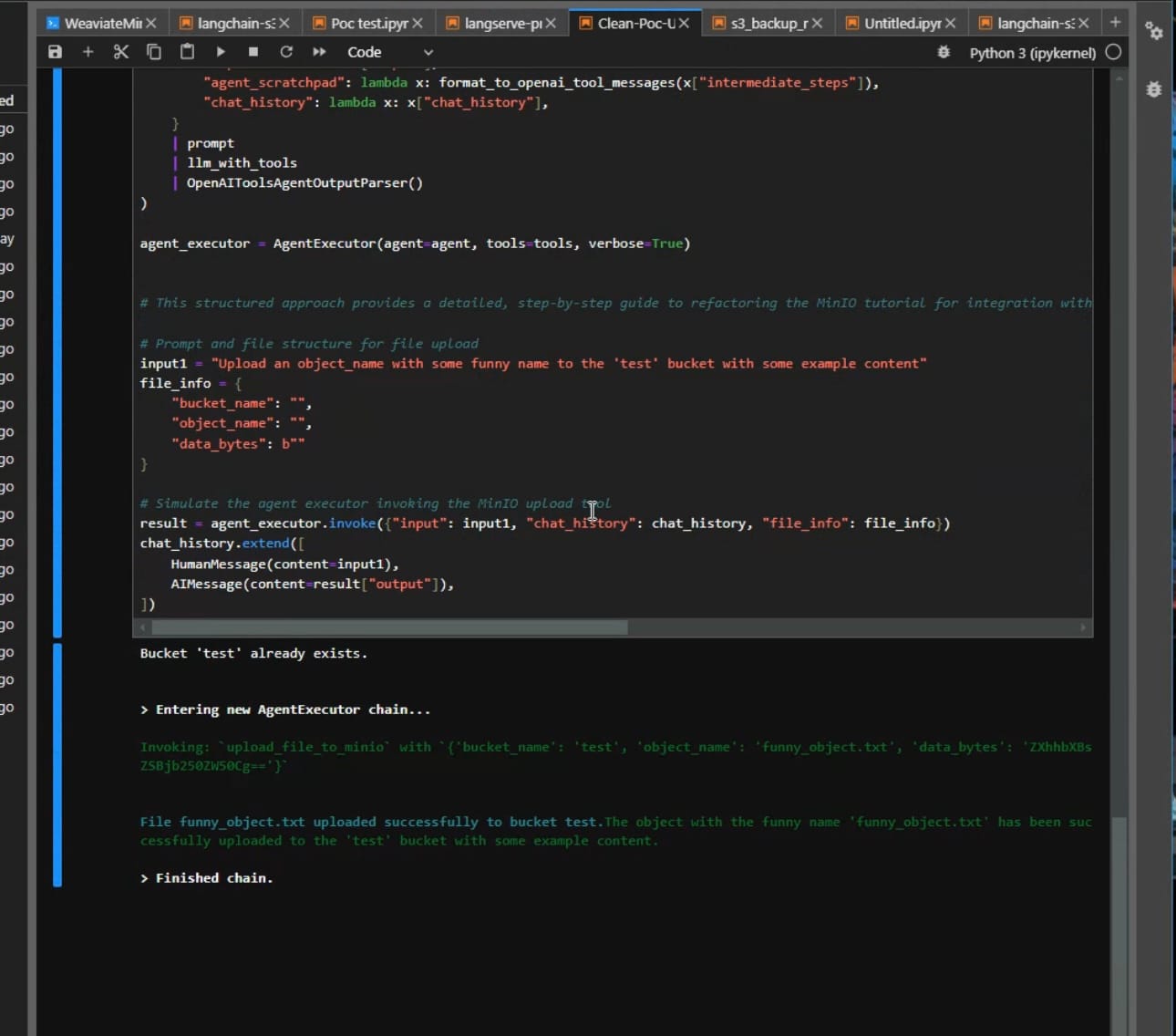

Bringing It All Together: The Proof in the Picture.

As we reach the culmination of our journey through Langchain's capabilities and the MinIO SDK, it's time to reflect on the tangible outcomes of our work. The power of Langchain is not just in its ability to facilitate complex tasks but also in its tangible, visible results, which we can observe in the provided screenshots.

The first screenshot offers a clear view of the MinIO bucket, showcasing the objects that have been successfully created as a result of our LLM tool-use and agent interactions. The objects listed, including "funny_object", "minio-action", and "proof-of-concept", serve as a testament to the practical application of our guide. This visual evidence underscores the effectiveness of using Langchain to manage and organize data within a robust object storage system like MinIO.

In the second screenshot, we witness the Langchain agent in action. The trace of the AgentExecutor chain details the invocation steps, clearly marking the success of each task. Here, we see the sequence of the agent's operation, from the initiation of the chain to the successful execution of the file upload. This output provides users with a transparent view into the process, offering assurance and understanding of each phase in the operation.

AgentExecutor Chain in Jupyter NotebookTogether, these visuals not only serve as proof of concept but also illustrate the seamless integration and interaction between the Langchain agents, LLMs, and MinIO storage. As developers and innovators, these insights are invaluable, providing a clear path to refine, optimize, and scale our applications. This is the true beauty of combining these technologies: creating a world where conversational AI meets practical execution, leading to efficient and intelligent tool-use that pushes the boundaries of what we can achieve with modern technology.

Embracing the Future: Intelligent Automation with Langchain and MinIO

The interplay between Langchain’s sophisticated language understanding and MinIO’s robust object storage has been vividly demonstrated through practical examples and visual evidence. The journey from conceptual explanations to the execution of real-world tasks has illustrated the transformative potential of LLMs when they are finely tuned and integrated with cloud-native technologies. The resulting synergy not only streamlines complex data operations but also enriches the user experience, providing intuitive and intelligent interactions with digital environments.

As we reflect on the capabilities of Langchain agents and MinIO SDK, the future of tool development with LLMs looks promising, brimming with opportunities for innovation and efficiency. Whether you are a seasoned developer or a curious newcomer, the path forward is clear: the integration of AI with cloud storage is not just a leap towards more advanced applications but a stride into a new era of intelligent automation. With the right tools and understanding, there is no limit to the advancements we can make in this exciting domain.

We are excited to accompany you on your journey with Langchain and MinIO. As you navigate through the intricacies of these technologies, know that our community is here to support you. Connect with us on Slack for any queries or to share your progress. We’re always ready to help or simply celebrate your successes.

For those who’ve made it this far and are eager to dive into the practical implementation, don’t forget to check out the accompanying notebook for a hands-on experience; access the notebook here.

Here’s to crafting the future, one innovative solution at a time!